As competition heats up in the high-bandwidth memory (HBM) sector, leading memory chipmakers are expanding their artificial intelligence (AI) semiconductor strategies to include Compute Express Link (CXL), an advanced memory interface technology. The shift reflects surging demand from Big Tech firms for next-generation AI data centers, where the ability to process massive volumes of data efficiently has become increasingly critical.

Servers in these data centers typically combine several types of semiconductor components, such as CPUs, GPUs, and DRAM. CXL is an interface designed to speed up data exchange among these components. Because it lets the same workloads run with less hardware, it can lower infrastructure costs. "Not only does it increase memory capacity, but it also greatly speeds up data movement between different semiconductors," an industry representative commented. As a result, CXL has emerged alongside HBM as one of the key technologies of the AI era.

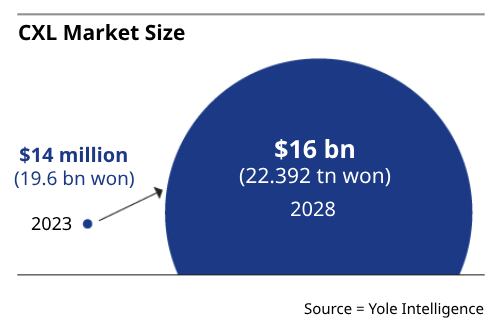

The world's three leading memory manufacturers, Samsung Electronics and SK hynix of South Korea and U.S.-based Micron Technology, are stepping up efforts to take an early lead in the CXL memory market. Market research firm Yole Intelligence forecasts that the global CXL market will grow from $14 million in 2023 to approximately $16 billion by 2028.
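To put the forecast in perspective, the two endpoints imply a compound annual growth rate (CAGR). The short sketch below computes it directly from the reported figures, assuming a five-year span (2023 to 2028) and treating both values as exact; the `cagr` helper is illustrative and not part of Yole's methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1

# Reported forecast: $14 million (2023) -> about $16 billion (2028), i.e. 5 years
rate = cagr(14e6, 16e9, 2028 - 2023)
print(f"Implied CAGR: {rate:.0%}")
```

The result is roughly 309% per year, meaning the market would need to about quadruple annually to reach the forecast.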

At CXL DevCon 2025, held on April 29 in California, Samsung Electronics and SK hynix presented their latest advances in CXL technology. The conference, now in its second year, is organized by the CXL Consortium, an international industry group of semiconductor companies.

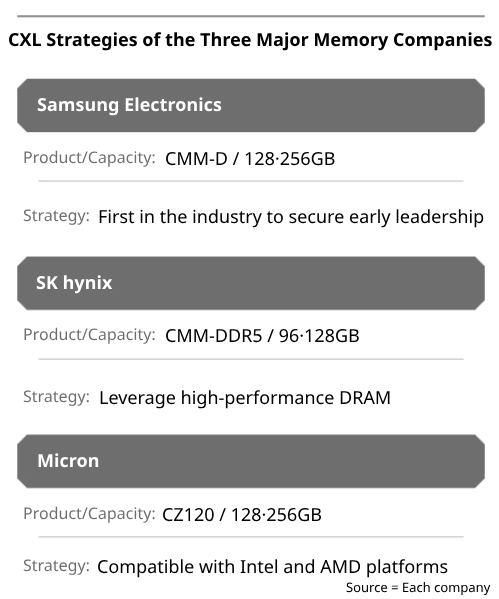

At the conference, Samsung demonstrated its memory pooling technology, which uses CXL to combine several memory modules into a single shared resource pool. This lets users allocate and reassign memory dynamically according to demand. Samsung is a pioneer in the field: it developed the world's first CXL-based DRAM in May 2021, and in 2023 it launched a 128GB DRAM module compliant with the CXL 2.0 specification, completing customer validation by the end of that year. Samsung is now preparing to complete testing of a 256GB version. "Samsung aims to dominate the CXL sector so it does not lose further ground, as it has in HBM," an industry source noted.
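The pooling idea can be illustrated with a toy model: capacity from several modules is summed into one pool, and hosts draw from and return to that pool on demand instead of being tied to a fixed module. This is a conceptual sketch only, not Samsung's implementation or any real CXL API; the `MemoryPool` class, host names, and module sizes are hypothetical, and it ignores which physical module backs each allocation.

```python
from dataclasses import dataclass, field

@dataclass
class MemoryPool:
    """Toy model of pooled memory: several modules exposed as one shared capacity."""
    module_sizes_gb: list[int]                                # hypothetical module capacities
    allocations: dict[str, int] = field(default_factory=dict)  # host -> GB currently held

    @property
    def total_gb(self) -> int:
        # Pool capacity is the sum of all attached modules
        return sum(self.module_sizes_gb)

    @property
    def free_gb(self) -> int:
        return self.total_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> bool:
        """Grant memory to a host if the shared pool has room; return success."""
        if size_gb <= 0 or size_gb > self.free_gb:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gb
        return True

    def release(self, host: str, size_gb: int) -> None:
        """Return up to size_gb from a host back to the pool."""
        held = self.allocations.get(host, 0)
        remaining = held - min(size_gb, held)
        if remaining:
            self.allocations[host] = remaining
        else:
            self.allocations.pop(host, None)

# Usage: three modules form a 512GB pool that two servers share over time
pool = MemoryPool([128, 128, 256])
pool.allocate("server-a", 300)     # server-a takes 300GB, 212GB left
pool.allocate("server-b", 250)     # refused: not enough free capacity
pool.release("server-a", 100)      # server-a shrinks to 200GB
pool.allocate("server-b", 250)     # now succeeds, 62GB left
```

The point of the design is that no single server needs a module as large as its peak demand: capacity freed by one host becomes immediately available to another.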

SK hynix, which holds a competitive edge in HBM, aims to carry that momentum into CXL, with a particular focus on its high-performance DRAM. On April 23, the company completed customer validation of a 96GB DDR5 DRAM module based on the CXL 2.0 standard. "When applied to servers, this module delivers 50% more capacity and 30% higher bandwidth than standard DDR5 modules," a company representative said. "It's a technology that can dramatically reduce infrastructure costs for data center operators." SK hynix is also pursuing validation of a 128GB version.

Micron Technology, the world's third-largest memory chipmaker, began rolling out CXL 2.0-based memory expansion modules last year as it works to close the technology gap with Samsung and SK hynix.

The rise of CXL is part of a broader shift in AI development, from training-centric models toward inference-focused ones. Until recently, AI performance was determined mainly by how much data a model could process during training. In that phase, hardware pairing GPUs with HBM, as in NVIDIA's AI accelerators, held a clear advantage.

Today, the emphasis has shifted toward inference-driven AI models. Rather than simply returning answers learned during training, these systems can generate new outputs through logical reasoning, even when the training data does not directly cover the question. Such workloads require both large datasets and fast, efficient computation, which is exactly what CXL architecture is designed to deliver. As efficient data handling becomes more critical across the AI industry, interest in this memory interface technology is growing accordingly.